I mentioned in the memory re-ordering entry that I did some disassembly of a mutex. I actually did two disassemblies, one with a lock guard and one without. Here’s what I found.

I used this C++ code for the non-guarded mutex, which ensures that each thread can access a shared resource without another thread interfering:

```cpp
...
std::mutex mutex; // declared at global scope
...
mutex.lock(); // executed within a threaded function
...
mutex.unlock();
...
```
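For anyone who wants to reproduce the experiment, here is a minimal sketch of how the elided parts might be filled in. The shared counter, the function names `add_to_counter` and `run_counter_demo`, and the thread and iteration counts are all assumptions for illustration; the original program is not shown in full.

```cpp
#include <mutex>
#include <thread>
#include <vector>

std::mutex mutex;         // declared at global scope, as in the snippet above
long shared_counter = 0;  // hypothetical shared resource

// Hypothetical threaded function: every increment happens between
// lock() and unlock(), so no two threads touch the counter at once.
void add_to_counter(int iterations) {
    for (int i = 0; i < iterations; ++i) {
        mutex.lock();
        ++shared_counter;
        mutex.unlock();
    }
}

// Starts the worker threads, waits for all of them, and returns the total.
long run_counter_demo(int thread_count, int iterations) {
    shared_counter = 0;
    std::vector<std::thread> workers;
    for (int t = 0; t < thread_count; ++t) {
        workers.emplace_back(add_to_counter, iterations);
    }
    for (auto& worker : workers) {
        worker.join();
    }
    return shared_counter;
}
```

Calling `run_counter_demo(4, 10000)` from `main` should return 40000 every time. Without the lock and unlock calls, the increments from different threads could race and the total could come up short.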

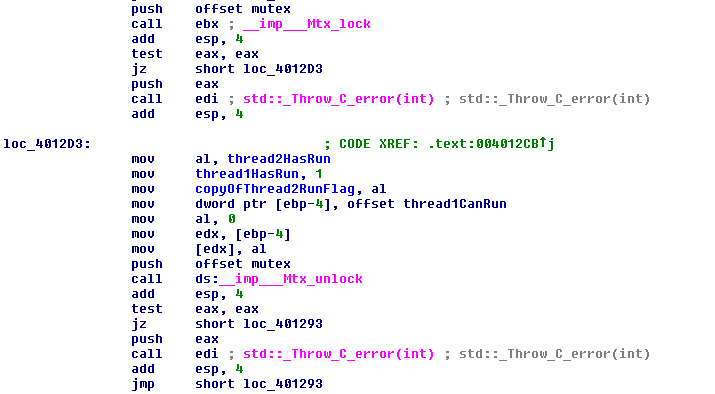

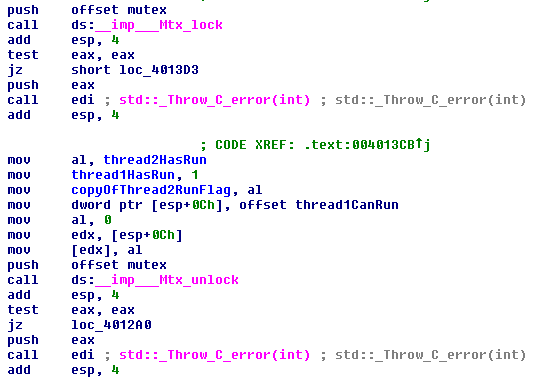

The x86 for this is different in one major way from the x86 we saw in the primary post, and that’s because we are calling into the data segment here.

The code calls the function behind __imp___Mtx_lock, then removes the call’s argument from the stack (that’s effectively what add esp, 4 does; unlike a pop, it only moves the stack pointer and doesn’t load the value into a register), and then checks whether the return value is zero (nonzero means an error). If it’s zero we jump down to the instructions we saw in the previous post (with a compiler reordering); otherwise we throw an exception. We see the same pattern of calling the function, checking the result, and maybe throwing an exception in the __imp___Mtx_unlock section.
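The call, check, and maybe-throw pattern reads roughly like the C++ below. This is only a sketch of the shape of the generated code, not the runtime’s actual implementation: `raw_mutex`, `raw_lock`, `raw_unlock`, and the `checked_` wrappers are hypothetical stand-ins for the imported functions, with a simple spin lock behind them so the example is self-contained.

```cpp
#include <atomic>
#include <stdexcept>

// Hypothetical C-style mutex standing in for the imported runtime functions.
struct raw_mutex {
    std::atomic_flag flag = ATOMIC_FLAG_INIT;
};

// Both functions return 0 on success and nonzero on error.
int raw_lock(raw_mutex* m) {
    if (m == nullptr) return 1;
    while (m->flag.test_and_set(std::memory_order_acquire)) {
        // spin until the current holder clears the flag
    }
    return 0;
}

int raw_unlock(raw_mutex* m) {
    if (m == nullptr) return 1;
    m->flag.clear(std::memory_order_release);
    return 0;
}

// Mirrors the disassembly: call, compare the result with zero,
// fall through on success, throw on anything else.
void checked_lock(raw_mutex* m) {
    int result = raw_lock(m);
    if (result != 0) {
        throw std::runtime_error("lock failed");
    }
}

void checked_unlock(raw_mutex* m) {
    int result = raw_unlock(m);
    if (result != 0) {
        throw std::runtime_error("unlock failed");
    }
}
```

The branch on the return value is the part that shows up as the test-and-jump after each call in the listing.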

If we look in the data segment we see the following for the mutex.

It’s just a variable that’s cross-referenced from our two threads, not terribly exciting. If we look at the extern segment we see both the lock and unlock functions. As a refresher, extern declares symbols that aren’t defined in the current assembly unit but are defined in some other unit and referenced by the current one.

The mutex lock and unlock functions are imported from outside our binary, and following them down toward the kernel takes more disassembly than this aside is meant to cover. Let’s move on to the lock-guarded case. How do lock guards like this work?

```cpp
...
std::mutex mutex;
...
std::lock_guard<std::mutex> lock(mutex);
...
```

Notice that the above code doesn’t contain an unlock statement. That would be a bug if we were just calling the mutex’s lock method, because the mutex would never unlock. Looking at the disassembly below, we see that the mutex does unlock when the function finishes, because the guard’s destructor runs when it goes out of scope.

I had half expected that the lock guard would do something fancy, but it doesn’t seem to be much more than insurance for unlocking the mutex. Yep, that’s the same code we saw above in the manual lock and unlock case. If you’d like to explore the exciting world of kernel mutexes, take a look at this C code for the Linux mutex.
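To make the “insurance” idea concrete, here is a minimal sketch of a guard that locks in its constructor and unlocks in its destructor. `simple_lock_guard` is a hypothetical stand-in written for illustration, not the standard library’s source, and `guarded_mutex`, `guarded_value`, and `increment_guarded` are made-up names.

```cpp
#include <mutex>

// Hypothetical, stripped-down guard: lock on construction,
// unlock on destruction, and nothing else.
template <typename Mutex>
class simple_lock_guard {
public:
    explicit simple_lock_guard(Mutex& m) : mutex_(m) { mutex_.lock(); }
    ~simple_lock_guard() { mutex_.unlock(); }

    // Copying a guard would unlock the same mutex twice, so forbid it.
    simple_lock_guard(const simple_lock_guard&) = delete;
    simple_lock_guard& operator=(const simple_lock_guard&) = delete;

private:
    Mutex& mutex_;
};

std::mutex guarded_mutex;
int guarded_value = 0;

// No explicit unlock: the guard's destructor releases the mutex
// when the function returns, including on an early return or a throw.
int increment_guarded() {
    simple_lock_guard<std::mutex> lock(guarded_mutex);
    return ++guarded_value;
}
```

Calling `increment_guarded()` twice in a row works only because the first call released the mutex on the way out; if it hadn’t, the second call would block forever.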